QAL - Quality-Aware Loss for 3D Reconstruction

Quality-Aware Loss (QAL): a loss for recall–precision balance in 3D reconstruction, aimed at robust 3D vision and robotics.

Abstract

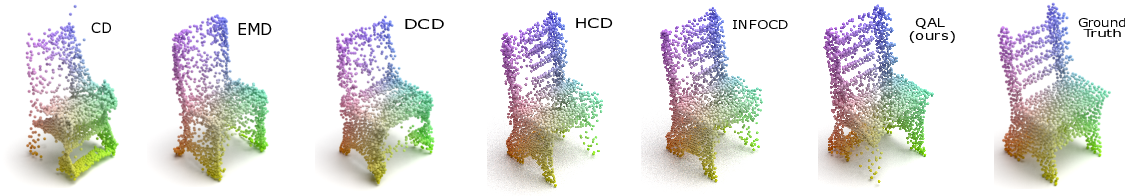

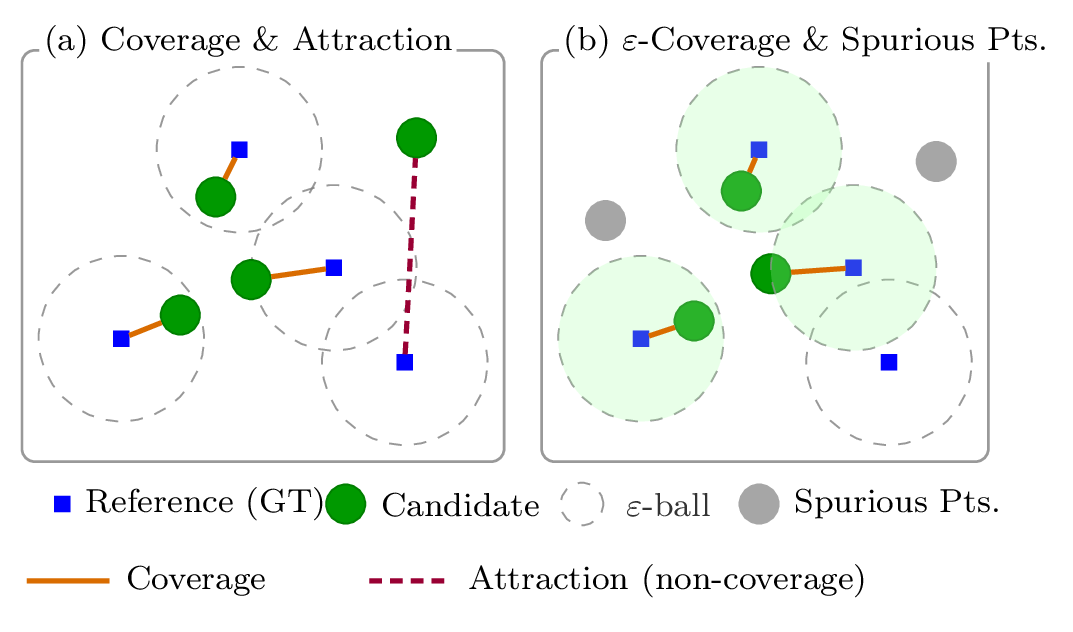

Volumetric learning underpins many 3D vision tasks such as completion, reconstruction, and mesh generation, yet training objectives still rely on Chamfer Distance (CD) or Earth Mover’s Distance (EMD), which fail to balance recall and precision. We propose Quality-Aware Loss (QAL), a drop-in replacement for CD/EMD that combines a coverage-weighted nearest-neighbor term with an uncovered–ground-truth attraction term, explicitly decoupling recall and precision into tunable components.
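To make the recall/precision framing concrete, the sketch below splits plain Chamfer Distance into its two directional nearest-neighbor terms and recombines them with separate weights. This is not the QAL formulation, whose coverage weighting and attraction term are defined in the paper. It only illustrates why a single symmetric sum cannot be tuned toward recall or precision. The function names and the weights `w_precision` and `w_recall` are hypothetical, and unsquared Euclidean distance is used as one common CD variant.

```python
import math

def nearest_distances(source, target):
    """Distance from each point in `source` to its nearest neighbor in `target`."""
    return [min(math.dist(p, q) for q in target) for p in source]

def directional_chamfer(pred, gt):
    """Return the two one-sided Chamfer terms as (pred_to_gt, gt_to_pred).

    pred_to_gt penalizes predicted points far from the ground truth
    (a precision-like term); gt_to_pred penalizes ground-truth points
    that no prediction lands near (a recall-like term).
    """
    pred_to_gt = sum(nearest_distances(pred, gt)) / len(pred)
    gt_to_pred = sum(nearest_distances(gt, pred)) / len(gt)
    return pred_to_gt, gt_to_pred

def weighted_two_term_loss(pred, gt, w_precision=1.0, w_recall=1.0):
    """Hypothetical weighted sum of the two directional terms (not QAL itself)."""
    precision_term, recall_term = directional_chamfer(pred, gt)
    return w_precision * precision_term + w_recall * recall_term

# Toy example: the prediction misses the last ground-truth point entirely.
gt = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
pred = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]

precision_term, recall_term = directional_chamfer(pred, gt)
print("precision-like term:", precision_term)
print("recall-like term:", recall_term)
print("recall-weighted loss:", weighted_two_term_loss(pred, gt, w_recall=3.0))
```

In this toy case every predicted point sits exactly on the ground truth, so the precision-like term is zero, while the missed point shows up only in the recall-like term. With equal weights the two effects are averaged together, which is the imbalance the abstract attributes to CD. The brute-force nearest-neighbor search here is quadratic and meant for illustration only. A training loop would typically use a batched tensor implementation.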

Across diverse pipelines, QAL achieves consistent coverage gains, averaging +4.3 points over CD and +2.8 points over the best existing alternatives. Though modest in percentage terms, these gains reliably recover thin structures and under-represented regions that CD/EMD overlook. Extensive ablations confirm stable performance across hyperparameters and output resolutions, while full retraining on PCN and ShapeNet demonstrates generalization across datasets and backbones. Moreover, QAL-trained completions yield higher grasp scores under GraspNet evaluation, showing that improved coverage translates directly into more reliable robotic manipulation.
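The abstract does not spell out how coverage is measured, so the following is only a sketch under a common assumption: coverage is the fraction of ground-truth points that have a predicted point within a distance threshold, and precision is the same quantity in the other direction. The threshold `tau` and the function names are hypothetical and may differ from the paper's evaluation protocol.

```python
import math

def fraction_within(source, target, tau):
    """Fraction of `source` points whose nearest neighbor in `target` is within `tau`."""
    hits = sum(
        1 for p in source
        if min(math.dist(p, q) for q in target) <= tau
    )
    return hits / len(source)

def coverage_and_precision(pred, gt, tau):
    """Assumed threshold-based metrics: (coverage, precision)."""
    coverage = fraction_within(gt, pred, tau)    # how much of the GT is reached
    precision = fraction_within(pred, gt, tau)   # how much of the prediction is on-surface
    return coverage, precision

# Toy example: a thin structure (the last two GT points) is missing from the prediction.
gt_points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0), (3.0, 0.0, 0.0)]
pred_points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 0.05, 0.0)]

coverage, precision = coverage_and_precision(pred_points, gt_points, tau=0.1)
print("coverage:", coverage)
print("precision:", precision)
```

Here every predicted point lies close to the ground truth, so precision is perfect, yet only half of the ground-truth points are covered. A metric of this shape is what makes missed thin structures visible even when an averaged distance looks small.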

QAL thus offers a principled, interpretable, and practical objective for robust 3D vision and safety-critical robotics pipelines.

Links

- Project Website: https://droneslab.github.io/qal/

- Paper: Available on arXiv